Bert Auburn Mom - A Look At Language Technology

Have you ever stopped to think about how your phone or computer seems to get what you mean, even when you phrase something a little oddly? It's as if an invisible helper were making sense of your words. Part of this quiet revolution in how machines handle human language is powered by a model called BERT. It is a big deal in the field of computers that work with language, and it helps make everyday digital life a little smoother, especially for someone as busy as an Auburn mom trying to keep up with everything.

BERT changed how computers interpret what we write and say. Instead of just matching keywords, it takes into account what each word means in context: the words around it, the subtle hints, and the way the sentence fits together. That means better results when you search for the perfect recipe or ask a smart speaker for help with your schedule. Getting computers to handle language more the way people do is a significant step forward.

So what does this mean for you, perhaps as an Auburn mom juggling school pickups, grocery lists, and maybe a little work? The tools you use every day are becoming more helpful and more intuitive. Technology like BERT runs quietly in the background, helping digital assistants actually assist and making information easier to find. The result is that talking to your devices feels a bit more natural, a little more like talking to someone who actually gets it.

Table of Contents

- Understanding Bert - A Look at How It Works

- What Makes Bert So Special for an Auburn Mom?

- Is Bert Really Better Than Older Ways?

- Getting to Know Bert's Inner Workings

Understanding Bert - A Look at How It Works

BERT, short for Bidirectional Encoder Representations from Transformers, is an important name among systems that help computers understand human language. It is a language representation model: it turns words and sentences into numbers a computer can work with. Think of it as a very diligent student who has studied how words are used across a huge range of writing. Researchers at Google introduced it in October 2018. For any piece of text, BERT produces a sequence of vectors (lists of numbers), one for each word or word piece, where each vector reflects what that word means in its particular sentence. Other programs then use those vectors to search, classify, or answer questions.

The Core Idea Behind Bert and Your Auburn World

The central idea behind BERT is that it uses the words on both sides of a word at once. It doesn't read only left to right, or only right to left; it takes in the whole sentence together. That is closer to how people read: we understand a word through the words that come before and after it. This bidirectional approach is a big reason BERT works so well, because it gives the computer a fuller picture of what a sentence is saying. When you ask your smart device a question, that context is what lets it answer the question you actually meant, which is a real help for an Auburn mom with a busy schedule.
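To make "both directions" concrete, here is a minimal Python sketch (an illustration, not BERT's actual code) of which words a model may use when working out one particular word. The sentence and the helper name `visible_context` are made up for this example. A classic left-to-right model sees only the words before the target, while a BERT-style masked setup can use every word except the hidden one.

```python
def visible_context(tokens, i, mode):
    """Return the words a model may use when building its picture of tokens[i].

    Hypothetical helper for illustration only.
    mode="left_to_right": only the words before position i.
    mode="bidirectional": every word except position i itself (during
    pre-training BERT hides the target word behind [MASK] and reads both sides).
    """
    if mode == "left_to_right":
        return tokens[:i]
    if mode == "bidirectional":
        return tokens[:i] + tokens[i + 1:]
    raise ValueError(f"unknown mode: {mode!r}")


sentence = ["i", "saw", "a", "bat", "fly", "out", "of", "the", "cave"]
target = sentence.index("bat")

print(visible_context(sentence, target, "left_to_right"))
# ['i', 'saw', 'a']
print(visible_context(sentence, target, "bidirectional"))
# ['i', 'saw', 'a', 'fly', 'out', 'of', 'the', 'cave']
```

Only the bidirectional view includes "fly out of the cave", the words that tell you this "bat" is an animal.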

Older systems struggled with this. ELMo, for example, combined a model trained to read left to right with a separately trained model that reads right to left, then joined their outputs. Because each model only ever saw one side of a word while it was learning, that stitched-together result didn't fully capture the meaning. BERT instead uses the Transformer, a network architecture built around a mechanism called attention. Every layer of a Transformer draws on the words to the left and to the right of each word at the same time, so the two directions are combined from the start rather than joined at the end. This integrated way of gathering context is a key reason BERT usually outperforms those earlier approaches.

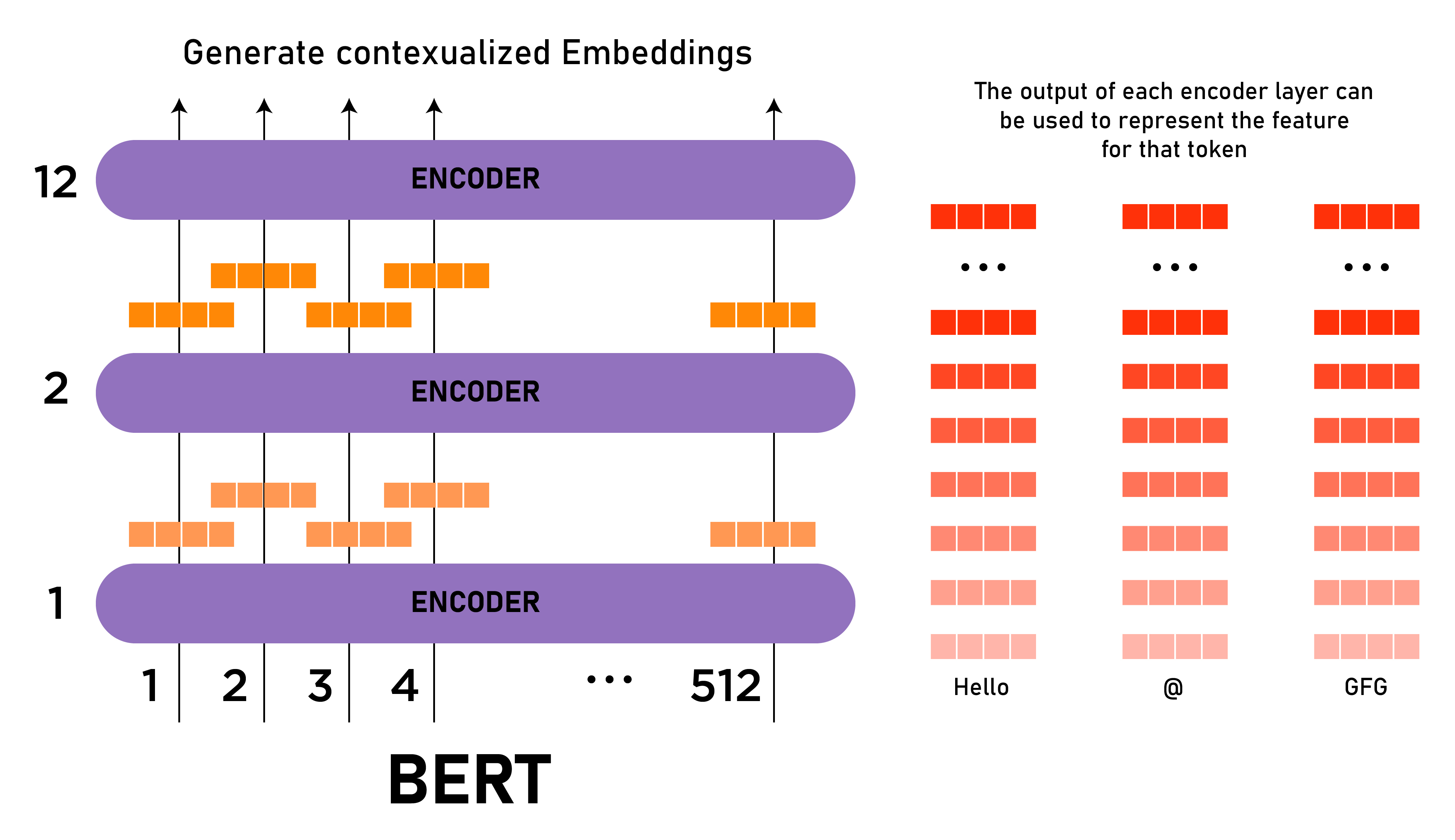

BERT is essentially a stack of many Transformer encoder layers. Each layer lets every word look at every other word in the sentence at the same time and weigh how relevant each one is, a mechanism called "self-attention." This allows BERT to focus on different parts of a sentence when working out the meaning of a particular word. For instance, in "I saw a bat fly out of the cave" and "I saw a bat at the baseball game," the word "bat" gets a different representation in each sentence because the surrounding words differ, and BERT can even draw on a second sentence when both are given to it together. That kind of context-sensitivity makes a real difference in how helpful digital tools can be for an Auburn mom.
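For readers who want to peek under the hood, the following is a minimal, pure-Python sketch of single-head scaled dot-product self-attention, the core operation inside each Transformer encoder layer: softmax(Q K^T / sqrt(d)) V. The four-word input, its 2-number "embeddings," and the weight matrices are invented toy values, not anything from a trained BERT; the real model uses learned weights, far longer vectors per word, and several attention heads per layer.

```python
import math


def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(row)  # subtract the max so math.exp cannot overflow
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x holds one vector per word. Every word scores every word, to its left
    and to its right, then takes a weighted average of their value vectors.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    k_t = [list(col) for col in zip(*k)]        # transpose K
    scale = math.sqrt(len(k[0]))                # sqrt of the key dimension
    scores = [[s / scale for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]  # one row of weights per word
    return matmul(weights, v), weights


# Toy 2-number "embeddings" for: i, saw, a, bat (made-up values).
x = [[1.0, 0.0], [0.5, 0.5], [0.1, 0.2], [0.9, 0.7]]
w_q = [[1.0, 0.2], [0.0, 1.0]]
w_k = [[0.8, 0.0], [0.3, 1.0]]
w_v = [[1.0, 0.0], [0.0, 1.0]]

output, weights = self_attention(x, w_q, w_k, w_v)
print([round(w, 3) for w in weights[3]])  # how much "bat" attends to each word
```

Each row of `weights` covers all four words, so "bat" can draw on words on either side of it; in the full model this calculation is repeated for every head in every layer.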

What Makes Bert So Special for an Auburn Mom?

You might be thinking, "That's all very interesting, but what does it mean for me?" BERT is special because it makes digital interactions more natural and effective. Think about searching online. When you type a question into a search engine, a model like BERT helps the computer work out what you are actually asking, rather than just matching individual keywords; Google announced in 2019 that it uses BERT in Search for this purpose. The payoff is more relevant results, faster, which is handy when you are trying to find the best local playground or the hours for a particular store, and less time sifting through unhelpful links.

How Bert Helps with Everyday Tasks for an Auburn Family

Consider how BERT's abilities might touch the daily life of an Auburn family. If you use a voice assistant to set reminders, play music, or check the weather, language models in the BERT family may be helping it interpret your requests, which means fewer frustrating moments where the device misunderstands you. Take "Remind me to pick up the kids at three." To handle it correctly, the system has to work out that "three" is a time of day rather than a number of kids, and that it most likely means 3:00 PM even though you never said "PM." Reading each word in light of the words around it is exactly the skill BERT-style models bring, and that precision is what makes these tools genuinely useful, something every Auburn mom can appreciate.

BERT also makes text classification, the job of sorting text into categories, more accurate. When your email is filtered, for example, a BERT-style model can help flag spam or sort messages into folders more reliably. That means less time wasted on unwanted messages and more time for what matters. It's like having a smart assistant that understands the nuances of language, working quietly in the background to keep an Auburn family's digital life a bit more organized and less cluttered.

Is Bert Really Better Than Older Ways?

The short answer is yes, in many ways. When BERT was introduced, it achieved state-of-the-art results on eleven language tasks and changed how the field approached these problems. Before BERT, many systems relied on recurrent networks such as LSTMs (long short-term memory networks), which read text one word at a time and carry a running summary forward. Those methods often had trouble holding on to the full context of a sentence. It's a bit like trying to follow a conversation by hearing one word at a time and having to remember everything said so far, rather than taking in the whole sentence at once. BERT brought a new level of understanding to the table.

The Strength of Bert's Design for Auburn Life

One of the main reasons BERT is so effective is its design. As we talked about earlier, it is built from many layers of Transformer encoders. In each layer, every word is compared with every other word in the sentence at the same time, so the model can relate two words no matter how far apart they are. Older recurrent methods processed words one after another, so information from early in a sentence had to survive many steps to reach a word near the end. Seeing the whole picture at once, layer after layer, gives BERT a deeper grasp of meaning, which translates into better performance across many language tasks and into more reliable digital tools for your Auburn life.
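Below is a deliberately simplified Python sketch of what "stacking" encoder layers means. Each layer applies self-attention and then a small feed-forward network, adding the layer's input back in (a "residual connection") and normalizing after each step, which follows the post-layer-norm arrangement of the original Transformer and BERT. To stay short, the sketch assumes a single attention head with no learned projections, layer normalization without its learned scale and shift, no bias terms, and random weights. The sizes (4 numbers per word, 8 hidden units) are toy choices; only the layer count of 12 matches BERT base.

```python
import math
import random


def layer_norm(vec, eps=1e-12):
    """Rescale a vector to mean 0 and variance 1 (no learned scale/shift here)."""
    mean = sum(vec) / len(vec)
    var = sum((v - mean) ** 2 for v in vec) / len(vec)
    return [(v - mean) / math.sqrt(var + eps) for v in vec]


def attend(x):
    """Single-head self-attention with Q = K = V = x (a simplification)."""
    d = len(x[0])
    out = []
    for q in x:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        m = max(scores)
        w = [math.exp(s - m) for s in scores]
        total = sum(w)
        out.append([sum(wi * v[j] for wi, v in zip(w, x)) / total for j in range(d)])
    return out


def gelu(z):
    """GELU activation, the nonlinearity BERT uses in its feed-forward layers."""
    return 0.5 * z * (1.0 + math.erf(z / math.sqrt(2.0)))


def feed_forward(vec, w1, w2):
    """Expand to len(w1[0]) hidden units, apply GELU, project back (no biases)."""
    hidden = [gelu(sum(vec[i] * w1[i][j] for i in range(len(vec)))) for j in range(len(w1[0]))]
    return [sum(hidden[j] * w2[j][k] for j in range(len(hidden))) for k in range(len(w2[0]))]


def encoder_layer(x, w1, w2):
    # Attention sublayer: add the input back (residual), then layer norm.
    x = [layer_norm([a + b for a, b in zip(xi, ai)]) for xi, ai in zip(x, attend(x))]
    # Feed-forward sublayer, applied to each word's vector separately.
    return [layer_norm([a + b for a, b in zip(xi, feed_forward(xi, w1, w2))]) for xi in x]


def encoder(x, layers):
    """Feed the word vectors through each layer in turn."""
    for w1, w2 in layers:
        x = encoder_layer(x, w1, w2)
    return x


rng = random.Random(0)
d_model, d_hidden, n_layers = 4, 8, 12
layers = [
    ([[rng.gauss(0, 0.5) for _ in range(d_hidden)] for _ in range(d_model)],
     [[rng.gauss(0, 0.5) for _ in range(d_model)] for _ in range(d_hidden)])
    for _ in range(n_layers)
]
words = [[rng.gauss(0, 1) for _ in range(d_model)] for _ in range(3)]  # 3 toy word vectors
result = encoder(words, layers)
print([[round(v, 2) for v in vec] for vec in result])
```

The `encoder` function simply hands each layer's output to the next one; a real BERT does the same, just with learned weights and much larger vectors.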

Another strength is how BERT learns. It was pre-trained on a huge amount of text that nobody had to label or categorize by hand. This is often called "unsupervised" or, more precisely, "self-supervised" learning, because the training targets come from the text itself. That let BERT absorb a great deal about how language works simply by reading large collections of books and Wikipedia articles. It learned to predict words that had been hidden in sentences, and to judge whether one sentence naturally follows another. Those two tasks gave BERT a strong sense of context, something older systems struggled with, and they make it versatile enough to help an Auburn mom with all sorts of digital needs.
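The second pre-training task described above, judging whether one sentence follows another, can be sketched in a few lines of Python. This is a hypothetical helper, `make_nsp_example`, not code from the BERT release: it pairs a sentence with either the sentence that really follows it (label "IsNext") or, half the time, a sentence from a different document (label "NotNext"), then packs the pair in the `[CLS] A [SEP] B [SEP]` layout BERT uses, with segment IDs marking which sentence each token belongs to. Splitting text into whole words rather than BERT's word pieces is a simplification, and the example sentences are made up.

```python
import random


def make_nsp_example(doc, idx, other_docs_sentences, rng):
    """Build one next-sentence-prediction example from sentence doc[idx].

    Hypothetical helper. doc is a list of sentences (each a list of words) and
    must have a sentence after idx. With probability 0.5 sentence B is the true
    next sentence; otherwise it is drawn from other documents, so it is very
    unlikely to be a real continuation.
    """
    if idx + 1 >= len(doc):
        raise ValueError("sentence A needs a following sentence in the same document")
    a = doc[idx]
    if rng.random() < 0.5:
        b, label = doc[idx + 1], "IsNext"
    else:
        b, label = rng.choice(other_docs_sentences), "NotNext"
    tokens = ["[CLS]"] + a + ["[SEP]"] + b + ["[SEP]"]
    segment_ids = [0] * (len(a) + 2) + [1] * (len(b) + 1)  # 0 = sentence A, 1 = sentence B
    return tokens, segment_ids, label


doc = [["school", "ends", "at", "three"], ["pickup", "is", "at", "the", "side", "door"]]
others = [["the", "recipe", "needs", "two", "eggs"], ["rain", "is", "expected", "tonight"]]
rng = random.Random(42)
for _ in range(3):
    print(make_nsp_example(doc, 0, others, rng))
```

During pre-training, BERT reads the packed tokens and predicts the label from its output at the `[CLS]` position.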

Getting to Know Bert's Inner Workings

To give a slightly more detailed picture, BERT is a large, complex system. The standard version, "BERT base," stacks 12 Transformer encoder layers, represents each word piece with a list of 768 numbers (its "hidden size"), and uses 12 "attention heads" per layer, that is, 12 separate attention calculations running side by side, each free to focus on a different kind of relationship between words. The bigger "BERT large" has 24 layers, a hidden size of 1,024, and 16 attention heads. This adds up to roughly 110 million parameters for BERT base and 340 million for BERT large. Parameters are the internal knobs and dials that BERT adjusts as it learns, and this scale is part of what makes it so powerful.
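BERT's parameter count can be roughly reproduced with some arithmetic. The Python sketch below counts the weights in BERT's embedding tables, in each encoder layer (attention projections, feed-forward network, and two layer norms), and in the small "pooler" layer on top, leaving out the extra layers used only during pre-training. It assumes the 30,522-entry vocabulary of the English uncased release, 512 positions, 2 segment types, hidden sizes of 768 (base) and 1,024 (large), and feed-forward sizes of 3,072 and 4,096. The number of attention heads does not appear because the heads split the hidden size between them rather than adding weights.

```python
def bert_param_count(layers, hidden, intermediate, vocab=30522, max_pos=512, type_vocab=2):
    """Count weights in a BERT encoder plus its pooler (no pre-training heads)."""
    # Word, position, and segment embedding tables, plus one LayerNorm (scale + shift).
    embeddings = (vocab + max_pos + type_vocab) * hidden + 2 * hidden
    # Query, key, value, and output projections, each a weight matrix plus a bias.
    attention = 4 * (hidden * hidden + hidden)
    # Feed-forward network: expand to `intermediate`, then project back.
    ffn = (hidden * intermediate + intermediate) + (intermediate * hidden + hidden)
    # Each layer also has two LayerNorms with a scale and a shift each.
    per_layer = attention + ffn + 2 * (2 * hidden)
    # Pooler: one dense layer applied to the [CLS] vector.
    pooler = hidden * hidden + hidden
    return embeddings + layers * per_layer + pooler


print(bert_param_count(12, 768, 3072))   # 109482240, about 110 million (BERT base)
print(bert_param_count(24, 1024, 4096))  # 335141888, reported as 340M for BERT large
```

The BERT paper rounds these totals to 110M and 340M.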

Bert's Learning Process and What it Means for Auburn Households

The way BERT learns is central to what it can do. First comes "pre-training," in which it reads an enormous amount of text: English Wikipedia (about 2.5 billion words) and BooksCorpus, a collection of books totaling about 800 million words. During this phase it works on two tasks. In the first, a "masked language model" task, about 15% of the word pieces in each input are chosen, most of them are replaced with a [MASK] placeholder, and BERT has to guess the original words. This teaches it how words relate to their context. In the second, "next sentence prediction," BERT is shown two sentences and must decide whether the second one actually followed the first in the original text or was picked at random, which happens half the time. This teaches it how sentences connect into larger ideas. Together, these tasks give BERT its strong grasp of context, which is useful for following the flow of conversation in Auburn households.
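Here is a minimal Python sketch of the masking step, following the recipe in the BERT paper: each chosen word piece is replaced with `[MASK]` 80% of the time, with a random word 10% of the time, and left unchanged 10% of the time, and the model is graded only on the chosen positions. One simplification to note: this sketch picks each word independently with probability 0.15, whereas the original code selects a fixed 15% of positions per example. The helper `mask_tokens`, the tiny vocabulary, and the sentence are made up for illustration.

```python
import random

SPECIAL = {"[CLS]", "[SEP]"}


def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """Return (inputs, labels) for masked-language-model training.

    labels[i] holds the original word where the model must make a prediction,
    and None everywhere else. Special tokens are never masked.
    """
    inputs = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if tok in SPECIAL or rng.random() >= mask_prob:
            continue                       # not chosen: no prediction needed here
        labels[i] = tok
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = "[MASK]"           # 80%: hide the word
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: swap in a random word
        # Remaining 10%: keep the original word, so the input looks more like
        # what the model sees after pre-training, when no [MASK] appears.
    return inputs, labels


vocab = ["kids", "school", "bus", "snack", "park", "three", "pick", "up"]
sentence = ["[CLS]", "pick", "up", "the", "kids", "at", "three", "[SEP]"]
inputs, labels = mask_tokens(sentence, vocab, random.Random(7), mask_prob=0.3)
print(inputs)
print(labels)
```

A higher `mask_prob` is used in the usage example only so that the short sentence is likely to have a few masked words.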

After pre-training, BERT can be "fine-tuned" for specific tasks. Usually this means adding one small output layer on top and briefly training the whole model on a much smaller set of labeled examples, so that it learns to classify text (is this email spam or not?), answer questions about a passage, or pick out the most important sentences for a summary. Being this easy to adapt is a huge advantage: the general understanding of language BERT built up during pre-training carries over to a wide range of real-world problems. That versatility is what helps make digital interactions smoother and more helpful for everyone, including an Auburn mom.
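The output-layer part of fine-tuning can be illustrated with a toy Python sketch. It trains a logistic-regression "spam or not" layer with gradient descent on top of fixed vectors standing in for BERT's summary vector for each email (its output at the `[CLS]` position). The three-number vectors and labels are invented, and this is a simplification: in real fine-tuning the same error signal also flows back into, and adjusts, all of BERT's own weights, and each vector has 768 or 1,024 numbers.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability that the email with feature vector x is spam."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_head(features, labels, lr=0.5, epochs=200):
    """Fit a one-layer logistic-regression head, updating after each example."""
    w, b = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            err = predict(w, b, x) - y  # gradient of log loss w.r.t. the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


# Made-up stand-ins for BERT's [CLS] vectors: 1 = spam, 0 = not spam.
features = [[0.9, 0.1, 0.8], [0.8, 0.2, 0.9], [0.1, 0.9, 0.2], [0.2, 0.8, 0.1]]
labels = [1, 1, 0, 0]

w, b = train_head(features, labels)
for x in features:
    print(round(predict(w, b, x), 3))
```

Because only a small layer is added and the labeled set can be modest, this step is far cheaper than pre-training, which is what makes BERT so easy to adapt.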
